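#!/bin/bash
# Scan the User-Agent log for known scanner signatures and drop the matching
# source IPs with iptables. Needs root privileges.

LOGFILE="/var/log/nhttpd/user-agents.log"

BAD_AGENTS=("sqlmap" "nikto" "acunetix" "dirbuster" "wpscan" "fuzz" "nmap" "masscan")

# Use a private temporary file instead of a fixed, predictable name in the
# world-writable /tmp; it is deleted automatically when the script exits.
BLOCKLIST="$(mktemp)" || exit 1
trap 'rm -f "$BLOCKLIST"' EXIT

# Extract suspicious IPs (assumes the client IP is the first field of each line)
for agent in "${BAD_AGENTS[@]}"; do
    grep -iF -- "$agent" "$LOGFILE" | awk '{print $1}' >> "$BLOCKLIST"
done

# Remove duplicates
sort -u -o "$BLOCKLIST" "$BLOCKLIST"

# Block IPs, skipping anything that is not a plain IPv4 address
# (iptables only handles IPv4; IPv6 sources would need ip6tables)
while read -r ip; do
    # -C checks whether the rule already exists, so reruns add no duplicates
    if ! iptables -C INPUT -s "$ip" -j DROP 2>/dev/null; then
        # -I inserts at the top, so an earlier ACCEPT rule cannot shadow the DROP
        iptables -I INPUT -s "$ip" -j DROP
        echo "Blocked IP: $ip"
    fi
done < <(grep -E '^([0-9]{1,3}\.){3}[0-9]{1,3}$' "$BLOCKLIST")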

Automated Analyzing and Blocking of suspicious User-Agents

☰ Section: Security | ⚀ Posted: 2025-04-29 02:27:04 | ⚀ Updated: 2025-04-29 02:27:04

DALL-E via ChatGPT - powered by OpenAI

Mastering Web Server Security

When it comes to web server security, protecting a system against unwanted visitors is like fighting a never-ending war. Attackers are constantly knocking on our doors, testing for weaknesses, and trying to slip through unnoticed. While firewalls and security modules provide a solid first line of defense, we gain a significant advantage by actively monitoring the behavior of the clients (often so-called bots) that connect to our server, especially by analyzing their User-Agent strings. Malicious bots, vulnerability scanners, and early-stage attackers often leave distinct traces in our access logs long before they succeed in exploiting a system.

One powerful (and often underestimated) tool we have at our disposal is the set of our server's log files. Hidden within all those lines of requests are important clues about who's visiting us — and sometimes, those clues can help us catch bad actors before they even get close to causing real damage.

If we want to stay ahead, we need to be proactive, not just reactive! In this article we'll walk through how to automatically detect suspicious User-Agents in our server logs and immediately block the offending IP addresses by updating the system's firewall rules, without having to babysit the server manually.

With a healthy work/life balance in mind, the goal is to minimize exposure time, harden the server proactively, and still have enough spare time for the things in life that really matter.

So let's dive in together!

Analyzing Log Files for Suspicious Activity

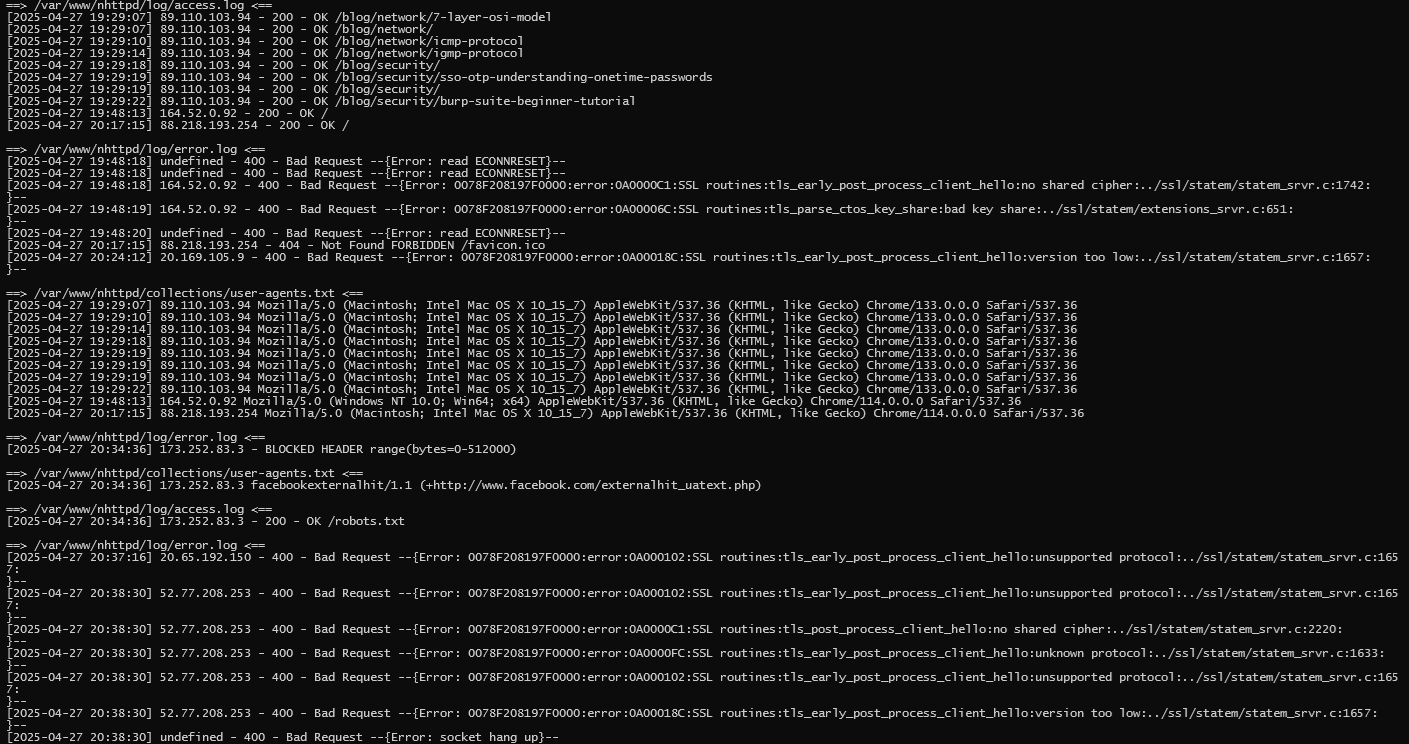

Every single request to our web server tells a story. Along with details like the requested URL and the HTTP status code, the access log typically also records a crucial piece of information about the visitor's client: the User-Agent string, which reveals what software the visitor claims to be using. Legitimate browsers follow predictable patterns, whereas malicious actors often use outdated, missing, or intentionally misleading User-Agents.
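To look at these User-Agents ourselves, a minimal sketch is enough. It assumes the widely used "combined" log format of nginx and Apache (an assumption, since the script at the top of this article reads a dedicated `user-agents.log`), in which the User-Agent is the last double-quoted field. Splitting each line on `"` makes it the sixth field; `extract_ua` and `top_user_agents` are hypothetical helper names.

```bash
# Assumes the "combined" access-log format:
#   $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent"
# Split on '"': field 2 = request line, field 4 = referer, field 6 = User-Agent.

# Print the User-Agent of every log line read from stdin.
extract_ua() {
    awk -F'"' '{ print $6 }'
}

# Print "count user-agent" pairs read from stdin, most frequent first.
top_user_agents() {
    extract_ua | sort | uniq -c | sort -rn
}
```

For example, `top_user_agents < /var/log/nginx/access.log | head -n 20` (log path assumed) lists the twenty most frequent User-Agents, which makes oddballs easy to spot.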

Most of the time, we'll see harmless, familiar names in our logs: browsers like Chrome, Firefox, Edge, or Safari, and crawlers like Googlebot. The image shows an example from a legitimate Chrome browser running on Mac OS X.

So, what will we find?

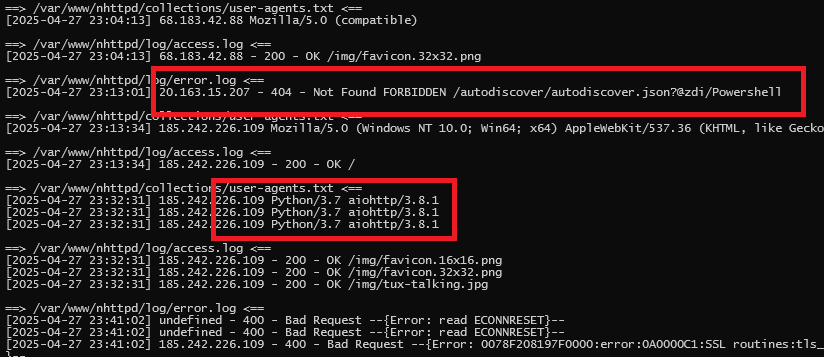

Sometimes, however, something weird shows up, as we can see in the following box.

The nginx example hints at automated vulnerability scanning, and when we spot entries like this, it's a clear red flag: sqlmap is a well-known penetration testing tool for automated SQL injection attacks. Legitimate visitors simply never announce themselves this way. By paying close attention to these kinds of clues, we can start building a reliable way to tell friend from foe.

Note that Chrome always reports its version as xxx.0.0.0, i.e. only the major version is real. This is a deliberate convention: browser vendors mask the full version number on purpose. Google calls this 'User-Agent Reduction'; its aim is to limit tracking.
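That convention can even serve as a weak signal. The following sketch is a hypothetical heuristic, not part of the original setup: it flags strings that claim to be Chrome but carry a full build number instead of the reduced `<major>.0.0.0` form, which points either to a browser that predates User-Agent Reduction or to a hand-crafted string. A hit is a reason to look closer, not proof of bad intent.

```bash
# Hypothetical heuristic, not from the original article.
# Succeeds (status 0) if the User-Agent claims to be Chrome but does not use
# the reduced "Chrome/<major>.0.0.0" form; fails for reduced or non-Chrome strings.
is_unreduced_chrome() {
    # No "Chrome/<digits>." token at all: not a Chrome claim.
    printf '%s\n' "$1" | grep -Eq 'Chrome/[0-9]+\.' || return 1
    # Reduced form present: consistent with a current Chrome build.
    ! printf '%s\n' "$1" | grep -Eq 'Chrome/[0-9]+\.0\.0\.0( |$)'
}
```

A string such as `... Chrome/96.0.4664.110 Safari/537.36` would be flagged, while `... Chrome/135.0.0.0 Safari/537.36` would pass.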

How We Recognize Suspicious Patterns

Not every odd User-Agent means trouble, but certain patterns are just too suspicious to ignore. There are several indicators, and over time we'll develop a feel for the most common signs, such as:

- User-Agents that directly mention hacking tools (e.g. sqlmap, nikto, dirbuster, acunetix)

- User-Agents that are missing entirely (empty string) or contain obviously fake data (e.g. "bot", "scanner", "h4ck3r").

- User-Agents claiming extremely outdated browser versions that are no longer in common use.

- Obvious giveaways like the words "scanner", "bot" or "fuzz" in the string.

- Nonsensical or garbled User-Agent strings.

- Clients immediately probing sensitive or non-public endpoints (e.g., /admin, /phpmyadmin, /login).
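Several of these indicators can be checked with a small shell function. This is a minimal sketch under stated assumptions: the keyword list mirrors the `BAD_AGENTS` array of the script at the top plus a few words from the list above, and the sensitive paths are hypothetical examples. The generic word "bot" is deliberately left out, because it would also match friendly crawlers such as Googlebot.

```bash
# Hypothetical classifier for the indicators listed above.
# Prints a short reason and returns 0 if the User-Agent looks suspicious;
# prints nothing and returns 1 otherwise.
classify_ua() {
    local ua
    ua=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
    case "$ua" in
        ''|'-')
            echo "empty"; return 0 ;;
        *sqlmap*|*nikto*|*acunetix*|*dirbuster*|*wpscan*|*nmap*|*masscan*)
            echo "tool"; return 0 ;;
        *scanner*|*fuzz*|*h4ck3r*)
            echo "keyword"; return 0 ;;
    esac
    return 1
}

# Succeeds when a request path targets a typically non-public area.
# The path list is a hypothetical example; /login may be legitimate on some sites.
is_sensitive_path() {
    case "$1" in
        /admin*|/phpmyadmin*|/login*|/wp-login.php*|/.env*|/.git/*) return 0 ;;
    esac
    return 1
}
```

For instance, `classify_ua 'sqlmap/1.0'` prints `tool`, while a regular Googlebot string is not flagged.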

Automating the Firewall Blocking

Now comes the fun part: instead of manually sifting through logs and copy-pasting IP addresses into firewall rules, we can automate everything. Once we have identified the attacking IP addresses, the next logical step is to block them. On a Linux system, this can be done with a simple script that calls iptables (or nftables, depending on our setup).

By writing simple rules to watch for these signs, we can automatically extract the IP addresses that deserve our attention, and our firewall's attention too. The basic Bash script at the top of this article does exactly that: it scans our logs for bad User-Agents and blocks the corresponding IPs.
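The script at the top of this article relies on iptables. On hosts that use nftables instead, a minimal sketch could keep offenders in a named set; the table name `blocker`, the set name `badips` and the helper names are hypothetical. The set's `timeout` flag lets the kernel expire entries on its own, and setting `DRY_RUN=1` prints the commands instead of executing them (real runs need root).

```bash
# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# One-time setup: table, input base chain, IPv4 set with timeouts, drop rule.
# Run this only once: "nft add rule" appends a new rule on every call.
nft_setup() {
    run nft add table inet blocker
    run nft add chain inet blocker input '{ type filter hook input priority 0; policy accept; }'
    run nft add set inet blocker badips '{ type ipv4_addr; flags timeout; }'
    run nft add rule inet blocker input ip saddr @badips drop
}

# Add one IPv4 address ($1) to the set; it expires after $2 (default 24h).
nft_block() {
    run nft add element inet blocker badips "{ $1 timeout ${2:-24h} }"
}
```

For example, `DRY_RUN=1 nft_block 203.0.113.5 12h` only prints the `nft add element` command, which makes it easy to review what would happen before switching to real runs.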

Best Practices and Tips

Before blocking anything, we start by watching. Whenever a suspicious User-Agent is detected, we don't immediately deny access. Instead, we record the request, the headers, and the IP address – carefully noting every move.
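A minimal sketch of this "watch first" phase, assuming the combined log format (User-Agent in the sixth `"`-separated field) and a hypothetical `WATCH_PATTERN`: instead of touching the firewall, `watch_hits` appends a timestamp, the client IP, the request line and the User-Agent of every matching entry to a review file. A standard access log does not contain all request headers, so capturing those would need additional server-side logging.

```bash
# Hypothetical pattern list (extended regex, matched case-insensitively).
WATCH_PATTERN='sqlmap|nikto|acunetix|dirbuster|wpscan|masscan|nmap'

# Usage: watch_hits ACCESS_LOG WATCH_FILE
# Appends 'timestamp ip request "user-agent"' for every suspicious line.
watch_hits() {
    awk -F'"' -v pat="$WATCH_PATTERN" -v now="$(date -u +%Y-%m-%dT%H:%M:%SZ)" '
        tolower($6) ~ pat {
            split($1, f, " ")    # f[1] is the client IP
            printf "%s %s %s \"%s\"\n", now, f[1], $2, $6
        }' "$1" >> "$2"
}
```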

By continuously analyzing our access logs and reacting in near real-time, we can drastically reduce our attack surface. Automated blocking based on suspicious User-Agents is a lightweight yet powerful method to prevent common vulnerability scans and reduce noise in our logs, freeing up resources for detecting more sophisticated threats.

As always, we should remember that no single measure provides complete protection. Defense in depth — multiple layers of security — remains the best strategy against determined attackers.

- Use more sophisticated log parsers.

- Only block after multiple suspicious requests (to avoid false positives).

- Log blocked attempts for auditing purposes.

- Regularly clear expired blocks.
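The tip about waiting for multiple suspicious requests translates into a simple threshold. This sketch assumes, like the `awk '{print $1}'` in the original script, that the client IP is the first field of each input line; `ips_over_threshold` is a hypothetical helper that prints only the IPs seen at least `MIN_HITS` times.

```bash
# Usage: ips_over_threshold MIN_HITS < suspicious_lines
# Prints each client IP (first whitespace-separated field) that appears at
# least MIN_HITS times (default 3), sorted, so one odd request does not trigger a block.
ips_over_threshold() {
    awk -v min="${1:-3}" '
        { hits[$1]++ }
        END { for (ip in hits) if (hits[ip] >= min) print ip }
    ' | sort
}
```

For instance, `grep -iF sqlmap /var/log/nhttpd/user-agents.log | ips_over_threshold 5` would return only IPs with five or more sqlmap hits.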

By running a script like this regularly, maybe via a simple cron job, we create a dynamic shield around our server that automatically picks up new offenders matching our patterns. And don't worry: this is just the beginning. As we grow more confident, we can make our detection logic smarter, add whitelisting for known good IPs, or even implement automatic expiry for blocks after a cooldown period.
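Automatic expiry needs to remember when each IP was blocked. A minimal sketch, assuming a hypothetical state file with one `IP EPOCH_SECONDS` line per block: `expired_ips` lists entries older than a cooldown and `prune_state` drops them from the file. Removing the firewall rule itself is left to the caller, e.g. a `while read -r ip` loop over `expired_ips` that calls `iptables -D INPUT -s "$ip" -j DROP` to delete the rule the original script added.

```bash
# Hypothetical state file format: one "IP EPOCH_SECONDS" line per block.
# Example crontab entry to run the blocker every 10 minutes (path hypothetical):
#   */10 * * * * /usr/local/sbin/block-bad-agents.sh

# Usage: expired_ips STATE_FILE COOLDOWN_SECONDS [NOW]
# Prints the IPs that were blocked more than COOLDOWN_SECONDS ago.
expired_ips() {
    local now="${3:-$(date +%s)}"
    awk -v now="$now" -v cooldown="$2" 'now - $2 > cooldown { print $1 }' "$1"
}

# Usage: prune_state STATE_FILE COOLDOWN_SECONDS [NOW]
# Rewrites STATE_FILE, keeping only entries that have not expired yet.
prune_state() {
    local now="${3:-$(date +%s)}" tmp
    tmp="$(mktemp)" || return 1
    awk -v now="$now" -v cooldown="$2" 'now - $2 <= cooldown' "$1" > "$tmp" && mv "$tmp" "$1"
}
```

The optional `NOW` argument exists mainly to make the functions easy to test with fixed timestamps.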

Conclusion

Our approach has two major benefits:

- It helps us avoid unnecessary false positives.

- It keeps our web server logs clean and focused on real threats.

When it comes to server security, we can’t afford to be passive. Attackers are fast, but with a little automation on our side, we can be even faster. Over time, we manually build a whitelist of friendly crawlers and known bots that are allowed to roam freely. Everyone else who behaves suspiciously or tries to flood our server will eventually find themselves permanently blocked. Because in cybersecurity, it's not about reacting fast – it's about reacting right.
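A minimal sketch of such an allowlist, assuming a hypothetical file with one IP address per line: `apply_allowlist` removes exact matches from the list of candidate IPs before they reach the firewall. It matches IPs rather than User-Agent strings, because a User-Agent is trivially spoofed; for crawlers such as Googlebot, Google documents verifying the source with a reverse DNS lookup instead.

```bash
# Usage: apply_allowlist ALLOWLIST_FILE < candidate_ips
# Prints the candidate IPs that are NOT listed in ALLOWLIST_FILE.
# A missing or empty allowlist passes every candidate through unchanged.
apply_allowlist() {
    if [ -s "$1" ]; then
        # -F fixed strings, -x whole-line matches, -v invert, -f patterns from file
        grep -vxFf "$1"
    else
        cat
    fi
}
```

Because `-x` matches whole lines only, an allowlisted `192.0.2.1` does not accidentally exempt `192.0.2.10`.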

By working with our access logs, recognizing suspicious behavior early, and automating our responses, we stay one step ahead. It's a simple but powerful way to harden our defenses — and it feels great knowing that our servers are actively protecting themselves, even while we sleep.

Let's keep improving our defenses together — one log file at a time.

Have lots of fun ...

.m0rph

-----BEGIN GEEK CODE BLOCK----- GIT e+ d-- s++:++ a++ G++ C+++ M-- W+++ L++++>$ P+++ E--- W+++ N++ o+ PS+++ PE+++ Y++ PGP++ !DI t++ 5-- X++ R++ tv- b-(++) h+ r- y(++)- -----END GEEK CODE BLOCK-----